Speakers

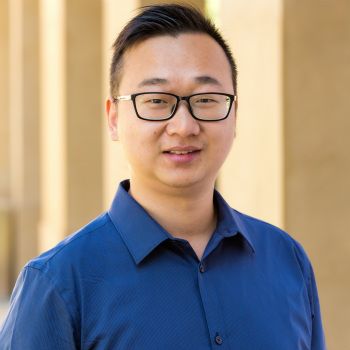

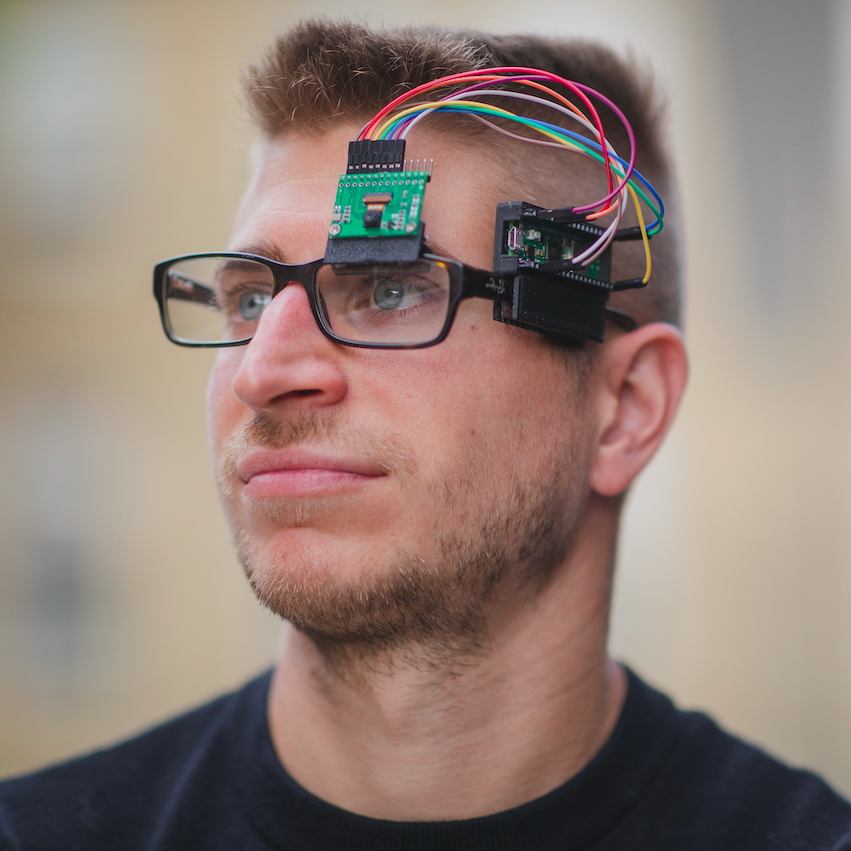

Talk Title: Aria Glasses: An open-science wearable device for egocentric multi-modal AI research

Talk Description: Augmented reality (AR) glasses will profoundly reshape how we design assistive AI technologies, including healthcare robotics, by harnessing human experience and training powerful AI models using egocentric data sources. We introduce Aria, all-day wearable glasses in a socially acceptable form factor that support always-available, context-aware, and personalized AI applications. Its industry-leading hardware specs, multi-modal data recording and streaming features, along with open-source software, datasets, and community, make Aria the go-to platform for egocentric AI research and are quickly accelerating this emerging research field. In this talk, I will give an overview of the Aria ecosystem, recent advances in state-of-the-art egocentric machine perception, and available open-source Aria software tools, and discuss the challenges and opportunities related to wearable/healthcare robotics research.

Speaker Bio: Dr. Zijian Wang is a Research Engineer at Meta’s Reality Labs Research division. His research interests include Augmented Reality (AR) glasses, 3D computer vision, contextual AI, hardware-software co-optimization, and robotics. Prior to joining Meta, he obtained his Ph.D. from Stanford University in 2019, advised by Prof. Mac Schwager, where he worked on planning and control for multi-robot systems.

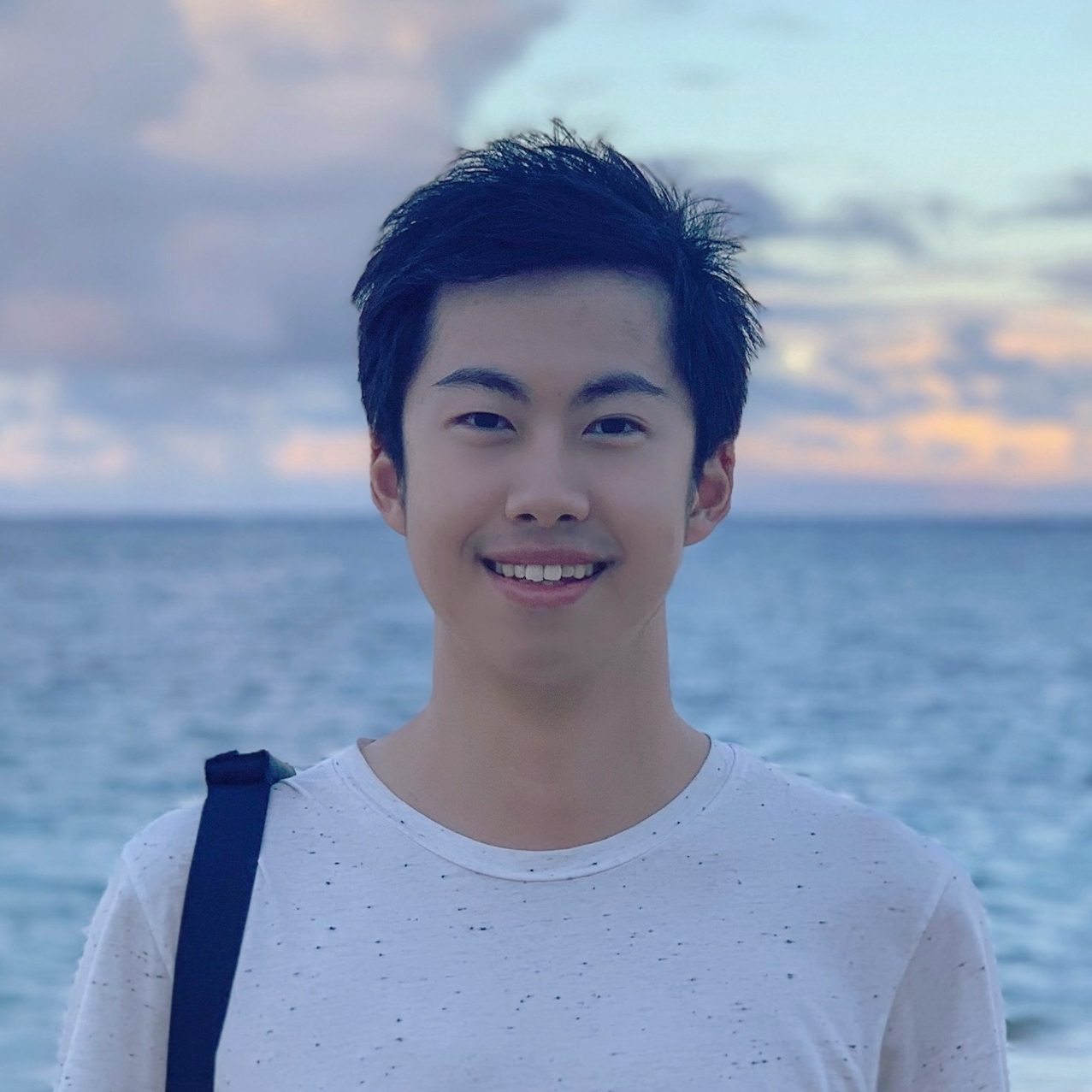

Talk Title: From Clinics to Homes: Assessing Disease and Health with Everyday Devices

Talk Description: Today’s clinical systems frequently exhibit delayed diagnoses, sporadic patient visits, and unequal access to care. Can we identify chronic diseases earlier, potentially before they manifest clinically? Furthermore, can we bring comprehensive medical assessments into patients’ own homes to ensure accessible care for all? In this talk, I will present machine learning methods to bridge these gaps with everyday sensors. I will first introduce an AI-powered digital biomarker that detects Parkinson’s disease multiple years before clinical diagnosis, using just nocturnal breathing signals. I will then discuss algorithms for contactless measurement of human vital signs using smartphones. Finally, I will discuss the potential of AI to realize passive, longitudinal, and in-home tracking of disease severity, progression, and medication response.

Speaker Bio: Yuzhe Yang is a Ph.D. candidate at MIT. His research interests include machine learning and AI for human diseases, health, and medicine. His research has been published in Nature Medicine, Science Translational Medicine, NeurIPS, ICML, and ICLR, and featured in media outlets such as WSJ, Forbes, and BBC. He is a recipient of the Rising Stars in Data Science, and of Ph.D. fellowships from MathWorks and Takeda.

Talk Title: MAESTRO: Multi-sensed AI Environment for Surgical Task and Role Optimisation

Talk Description: A fitting analogy for the modern operating theatre is that of a multiverse formed by a cluster of smaller universes: those of the patient, the multidisciplinary surgical team, and the operative environment. If we wish to fully appreciate all aspects governing surgical performance, in and around the operating theatre, we need to adopt a holistic approach and consider all universes in parallel. Inspired by the dichotomy between classic and quantum physics, we postulate that events/ripples in one universe can be detected in other parallel universes. This approach unifies the human, physical, and digital entities present in the operating theatre under a single framework. The MAESTRO vision aims to lay the foundations for the operating theatre of the future: a surgical environment powered by trustable, human-understanding artificial intelligence able to continually adapt and learn the best way to optimise safety, efficacy, teamwork, economy, and clinical outcomes. One could think of MAESTRO as being analogous to an orchestra conductor, overseeing a team working towards a common goal together, in unison.

Speaker Bio: George is the lead of the Human-centred Automation, Robotics and Monitoring in Surgery (HARMS) lab at The Hamlyn Centre, Institute of Global Health Innovation and Department of Surgery & Cancer, Faculty of Medicine, Imperial College London. He leads research in the areas of surgical robotics, soft robotics, minimal access surgical technology, perceptual human-robot and human-computer interfaces, and smart data-driven operating theatres. He is a member of the European Association of Endoscopic Surgery (EAES) Technology Committee and of the EAES Flexible Technology subcommittee.

Talk Title: Social Rehabilitation Network

Talk Description:

Speaker Bio: Dr. Etienne Burdet (https://www.imperial.ac.uk/human-robotics/) is Professor and Chair of Human Robotics at the Imperial College of Science, Technology and Medicine in the UK. He is also a visiting Professor at University College London. He holds an MSc in Mathematics (1990), an MSc in Physics (1991), and a PhD in Robotics (1996), all from ETH-Zurich. He was a postdoctoral fellow with TE Milner at McGill University, Canada, JE Colgate at Northwestern University, USA, and Mitsuo Kawato of ATR in Japan. Professor Burdet’s group uses an integrative approach of neuroscience and robotics to: i) investigate human sensorimotor control, and ii) design efficient interfaces for daily living technology and neurorehabilitation, which are tested in human experiments and commercialised.

Talk Title: Feeling the Future: Augmenting Medicine with Relocated Haptic Feedback in Extended Reality Environments

Talk Description: Extended reality, encompassing virtual reality, augmented reality, mixed reality, and everything in between, offers promising opportunities across various fields, including medicine and healthcare. Traditional medical training methods rely on expensive and often limited models, or direct practice on patients, posing ethical and safety concerns. Medical simulations in virtual environments provide a safer and more flexible alternative, allowing trainees to practice with virtual or “mixed reality” patients and receive feedback on their interactions. However, the absence of haptic feedback hampers the immersive experience. Wearable haptic devices can enhance realism and training effectiveness. By relocating haptic feedback from the fingertips to the wrist, trainees can interact with physical tools while receiving supplemental haptic feedback, improving realism and enabling unencumbered interactions with tools and patients. This talk will explore the importance of relocated haptic feedback in medical training, and its applications to enhance realism and facilitate skill refinement in extended reality environments.

Speaker Bio: Jasmin holds an SB in Mechanical Engineering from MIT and an MS in Mechanical Engineering from Stanford University. She is currently working on her Ph.D. in Mechanical Engineering at the Collaborative Haptics and Robotics in Medicine (CHARM) Lab under Prof. Allison Okamura. Jasmin’s research focus is on relocated haptic feedback for wrist-worn tactile displays, aiming to optimize haptic relocation from fingertips to wrist for virtual interactions. Ultimately, she seeks to inform design decisions for wearable haptic devices and haptic rendering cues in extended reality environments.

Talk Title: Robotic Leg Control: From Artificial Intelligence to Brain-Machine Interfaces

Talk Description: One of the grand challenges in human locomotion with robotic prosthetic legs and exoskeletons is control, i.e., how should the robot walk? In this talk, Dr. Laschowski will present his latest research on robotic leg control, ranging from autonomous control using computer vision and/or reinforcement learning to neural control using brain-machine interfaces. One of the long-term goals of his research is to conduct the first experiments to study, along this spectrum of autonomy, what level of control individual users prefer, which remains one of the major unsolved research questions in the field.

Speaker Bio: Dr. Brok Laschowski is a Research Scientist and Principal Investigator with the Artificial Intelligence and Robotics in Rehabilitation Team at the Toronto Rehabilitation Institute, Canada’s largest rehabilitation hospital, and an Assistant Professor in the Department of Mechanical and Industrial Engineering at the University of Toronto. He is also a Core Faculty Member in the University of Toronto Robotics Institute, where he leads the Neural Robotics Lab. His fields of expertise include machine learning, computer vision, neural networks (biological and artificial), human-robot interaction, reinforcement learning, computational neuroscience, deep learning, and brain-machine interfaces. Overall, his research aims to improve health and performance by integrating humans with robotics and artificial intelligence.

Talk Title: Soft Wearable Robot for Improving Mobility in Individuals with Parkinson’s Disease

Talk Description: Freezing of gait (FoG) is a profoundly disruptive phenomenon that significantly hastens disability in individuals with Parkinson’s disease (PD). Described as a feeling of the feet being glued to the floor, this paroxysmal motor disturbance halts walking against one’s intention. While a range of therapies attempt to manage FoG, their benefits are modest and transient, leaving a lack of effective treatments. In this presentation, I will introduce a soft hip flexion exosuit that has the potential to avert FoG in PD.

Speaker Bio: Dr. Jinsoo Kim earned his B.S. and M.S. in Electrical Engineering and Computer Science from Seoul National University (SNU). He then pursued his graduate studies at Harvard University, where he completed his Ph.D. and S.M. in Engineering Sciences. Following this, he served as a postdoctoral scholar in Mechanical Engineering at Stanford University. He is now an assistant professor in the Department of Electrical and Computer Engineering at SNU. His research focuses on enhancing mobility using wearable robots for both healthy individuals and those with gait disabilities.

Talk Title: Towards Human-Centered Robotics

Talk Description: Neural Signal Operated Intelligent Robots (NOIR) is a general-purpose, intelligent brain-robot interface system that enables humans to command robots to perform everyday activities through brain signals. Through this interface, humans communicate their intended objects of interest and actions to the robots using electroencephalography (EEG). The system demonstrates success in an expansive array of 20 challenging, everyday household activities, including cooking, cleaning, personal care, and entertainment. The effectiveness of the system is improved by its synergistic integration of robot learning algorithms, allowing NOIR to adapt to individual users and predict their intentions. This work enhances the way humans interact with robots, replacing traditional channels of interaction with direct, neural communication.

Speaker Bio: Ruohan is a postdoctoral researcher at Stanford Vision and Learning Lab (SVL), as well as a Wu Tsai Human Performance Alliance Fellow. He works on robotics, human-robot interaction, brain-machine interfaces, neuroscience, and art. He is currently working with Prof. Fei-Fei Li, Prof. Jiajun Wu, and Prof. Silvio Savarese. He received his Ph.D. from The University of Texas at Austin, advised by Prof. Dana Ballard and Prof. Mary Hayhoe.